What “scaling” really means

Scaling is making sure your product still feels fast and reliable when more people use it. Keep pages quick, checkouts smooth, and bills under control as you grow.

How to know it’s time

- Pages feel slower at peak hours. Users notice first. Believe them.

- Errors spike during launches or campaigns. That’s your system saying “I’m full.”

- The database looks busy all the time. Sustained high CPU or a growing list of slow queries points to a bottleneck.

The five moves that help most

Start small. Each move below is simple, high-impact, and safe to roll out in stages.

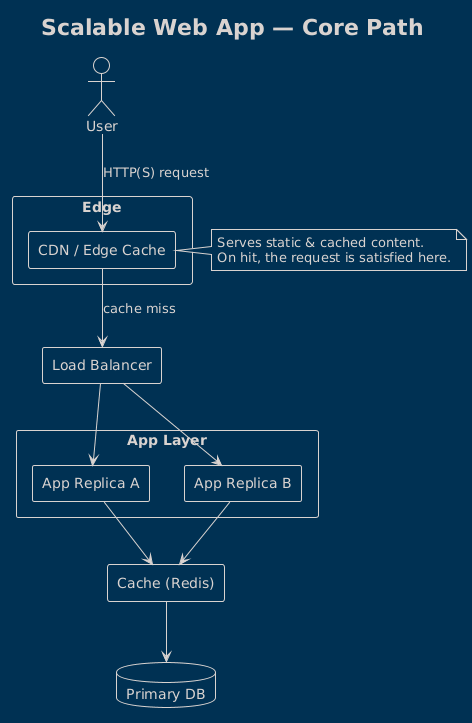

1) Cache what you can

Keep the results of common requests in a fast in-memory store (like Redis) so you don’t redo the same work. Begin with your top two read endpoints or your homepage.

- Win: Big speed boost with little code.

- Tip: Set short expiry times (TTLs of 1–5 minutes) and add a small random jitter so entries don’t all expire at once and cause a stampede.
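To make the tip above concrete, here is a minimal sketch of a cache with a short expiry and random jitter. It uses a plain in-process dictionary rather than Redis so it runs anywhere; `TTLCache`, `get_or_compute`, and `render_homepage` are hypothetical names chosen for illustration, and the 120-second expiry with 10% jitter is just one reasonable setting.

```python
import random
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Tiny in-process cache with per-entry expiry and random jitter."""

    def __init__(self, ttl_seconds: float = 120.0, jitter_fraction: float = 0.1,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.jitter_fraction = jitter_fraction
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the expensive work
        value = compute()
        # Spread expiry across ttl plus or minus the jitter so hot keys
        # do not all expire in the same instant.
        jitter = self.ttl_seconds * self.jitter_fraction * random.uniform(-1.0, 1.0)
        self._entries[key] = (now + self.ttl_seconds + jitter, value)
        return value


calls = {"count": 0}


def render_homepage() -> str:
    """Hypothetical stand-in for an expensive read endpoint."""
    calls["count"] += 1
    return "<html>homepage</html>"


cache = TTLCache(ttl_seconds=120.0)
first = cache.get_or_compute("homepage", render_homepage)
second = cache.get_or_compute("homepage", render_homepage)  # served from cache
```

With a shared store such as Redis the shape stays the same: look up the key, and on a miss compute the value and write it back with the jittered expiry.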

2) Put a CDN in front

Serve images, CSS, JS, and even some HTML from the edge. Your origin does less, and pages load faster, especially for users far from your server.

- Win: Faster global loads, lower server cost.

- Tip: Cache pages for anonymous visitors, and bust the cache on deploy.
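One common way to apply this tip is to let `Cache-Control` headers tell the CDN what it may keep, and to put a content hash in asset filenames so a deploy produces new URLs instead of requiring a manual purge. The sketch below assumes that approach; `fingerprinted_name` and `cache_headers` are hypothetical helpers, and the lifetimes are illustrative starting points rather than recommendations for every site.

```python
import hashlib
from pathlib import PurePosixPath
from typing import Dict


def fingerprinted_name(path: str, content: bytes) -> str:
    """Insert a short content hash before the file extension.

    A changed file gets a new name, so old cached copies are simply
    never requested again after a deploy.
    """
    digest = hashlib.sha256(content).hexdigest()[:8]
    p = PurePosixPath(path)
    return str(p.with_name(f"{p.stem}.{digest}{p.suffix}"))


def cache_headers(is_static_asset: bool, is_logged_in: bool) -> Dict[str, str]:
    if is_static_asset:
        # Fingerprinted files never change, so they can be cached for a year.
        return {"Cache-Control": "public, max-age=31536000, immutable"}
    if is_logged_in:
        # Personalised pages must not be stored by shared caches.
        return {"Cache-Control": "private, no-store"}
    # Anonymous HTML: browsers revalidate, the CDN keeps it for 60 seconds.
    return {"Cache-Control": "public, max-age=0, s-maxage=60"}


css_v1 = fingerprinted_name("static/app.css", b"body { color: black; }")
css_v2 = fingerprinted_name("static/app.css", b"body { color: navy; }")
```

Here `css_v1` and `css_v2` differ only in the hash segment, which is what lets the long `max-age` on static assets stay safe across deploys.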

3) Make slow work asynchronous

Don’t do heavy tasks during the request. Put them on a queue (emails, image processing, imports) and answer the user right away.

- Win: Fewer timeouts, happier users.

- Tip: Make workers idempotent so retries don’t double-charge or double-send.
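Here is a minimal sketch of the queue-plus-idempotent-worker idea, using Python's standard `queue` module in place of a real message broker. The job shape, the `idempotency_key` field, and the function names are assumptions made for illustration; in production the set of processed keys would need to live in a shared, durable store rather than in process memory.

```python
import queue
from typing import Dict, List, Set

jobs: "queue.Queue[Dict[str, str]]" = queue.Queue()
processed_keys: Set[str] = set()  # assumption: a shared store in real deployments
sent_emails: List[str] = []       # stand-in for the real side effect


def enqueue_welcome_email(user_id: str) -> None:
    """Called from the request handler; returns immediately."""
    jobs.put({"idempotency_key": f"welcome-email:{user_id}", "user_id": user_id})


def handle(job: Dict[str, str]) -> None:
    key = job["idempotency_key"]
    if key in processed_keys:
        return  # duplicate delivery or retry: do nothing
    sent_emails.append(job["user_id"])
    processed_keys.add(key)


def drain() -> None:
    """Process every queued job; a real worker would loop forever instead."""
    while True:
        try:
            job = jobs.get_nowait()
        except queue.Empty:
            return
        handle(job)
        jobs.task_done()


enqueue_welcome_email("u1")
enqueue_welcome_email("u1")  # simulated retry of the same job
enqueue_welcome_email("u2")
drain()
```

After `drain()` runs, `sent_emails` holds one entry per user even though the job for `u1` was delivered twice.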

4) Add more app replicas

Run a few copies of your app and spread traffic across them. Keep session state out of each replica’s local memory (use cookies, JWTs, or a shared store) so any replica can serve any user.

- Win: Handles spikes without code changes.

- Tip: Start with 2–3 replicas and basic autoscaling.
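To illustrate the cookie option for keeping sessions out of replica memory, here is a small sketch of a signed session cookie built on the standard library. It assumes every replica shares the same `SECRET_KEY`; the function names are hypothetical, the payload is signed but not encrypted, and expiry handling is left out for brevity.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional

SECRET_KEY = b"replace-me"  # assumption: the same secret is configured on every replica


def sign_session(data: dict) -> str:
    """Serialize the session and append an HMAC so tampering is detectable."""
    payload = base64.urlsafe_b64encode(json.dumps(data, sort_keys=True).encode())
    signature = hmac.new(SECRET_KEY, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def read_session(cookie: str) -> Optional[dict]:
    """Return the session data, or None if the signature does not match."""
    payload, _, signature = cookie.rpartition(".")
    expected = hmac.new(SECRET_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    return json.loads(base64.urlsafe_b64decode(payload))


cookie = sign_session({"user_id": 42})
session = read_session(cookie)          # any replica can verify this
tampered = read_session("x" + cookie)   # altered payload fails verification
```

Because verification needs only the shared secret, no replica has to remember which user it served last.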

5) Ease pressure on the database

Fix slow queries, add the right indexes, and send heavy reports to a read replica. Sharding can wait until data truly demands it.

- Win: Big stability gains for little effort.

- Tip: Measure your top 10 queries; fix those first.
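As a small illustration of "add the right indexes," the sketch below asks the database for its query plan before and after creating an index. It uses an in-memory SQLite database purely as a stand-in; the `orders` table, its columns, and the index name are made up for the example, and other databases expose the same idea through their own `EXPLAIN` output.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(1000)],
)

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"


def query_plan(connection: sqlite3.Connection) -> str:
    """Return SQLite's description of how it would run QUERY."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + QUERY, (42,)).fetchall()
    return " | ".join(row[-1] for row in rows)  # last column holds the plan text


plan_before = query_plan(conn)  # a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn)   # a search that uses idx_orders_customer
```

The exact wording of the plan varies between SQLite versions, but the shift from scanning the whole table to searching through the index is the signal to look for.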

A simple rollout plan (four steps)

- Step 1 — See the truth: Set basic dashboards for latency, error rate, and database load. Pick one “golden path” (e.g., product → cart → pay) and track it end to end.

- Step 2 — Quick wins: Turn on CDN for static assets and cache your hottest endpoint. Re-test the golden path.

- Step 3 — Cut the wait: Move slow tasks to a queue. Add one more app replica. Re-test at 2× your current peak.

- Step 4 — Make it stick: Fix the top slow queries, add autoscaling limits, and set alerts on p95 latency and cost.

Common mistakes (and easy fixes)

- Too many microservices too soon. Start with a tidy monolith; split only hot spots.

- One giant cache for everything. Use small, purposeful caches with clear expiry times.

- Doing heavy work inside requests. If it might take seconds, put it on a queue.

- Guessing instead of testing. Run a short load test before and after each change.

What to measure (keep it light)

- Latency: Aim for p95 under 200 ms on your key read routes.

- Error rate: Keep it below 1% during peak.

- Cache hit rate: Push past 80% for anonymous traffic.

- Cost per 1,000 requests: Track this monthly so growth doesn’t surprise you.
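The four numbers above can be computed from very little data. The sketch below is one way to do it, assuming you can export a list of request latencies and a few counters; `percentile` uses the simple nearest-rank method, `summarize` is a hypothetical helper, and the sample figures are invented for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: Sequence[float], errors: int, requests: int,
              cache_hits: int, cache_lookups: int,
              cost: float) -> Tuple[Dict[str, float], List[str]]:
    report = {
        "p95_ms": percentile(latencies_ms, 95),
        "error_rate": errors / requests,
        "cache_hit_rate": cache_hits / cache_lookups,
        "cost_per_1000": cost / requests * 1000,
    }
    # Targets taken from the list above: p95 < 200 ms, errors < 1%, hits > 80%.
    breaches: List[str] = []
    if report["p95_ms"] >= 200:
        breaches.append("p95_ms")
    if report["error_rate"] >= 0.01:
        breaches.append("error_rate")
    if report["cache_hit_rate"] <= 0.80:
        breaches.append("cache_hit_rate")
    return report, breaches


# Invented sample data: 100 latencies from 1 ms to 100 ms.
report, breaches = summarize(
    latencies_ms=[float(ms) for ms in range(1, 101)],
    errors=5, requests=1000, cache_hits=900, cache_lookups=1000, cost=0.05,
)
```

Cost has no fixed target here, so the sketch only reports it; comparing the monthly figure against the previous month is usually enough.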

When you might need “advanced” moves

If reads dwarf writes and you’re still slow, add read replicas. If one table grows faster than everything else, consider partitioning. If a few tenants are “noisy,” place them on their own database. These are normal milestones, not day-one requirements.
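Two of these moves, read replicas and a dedicated database for a noisy tenant, often come down to a small routing decision in the app. The sketch below shows that decision in isolation; the connection strings, the tenant ID, and `pick_database` are all hypothetical, and a real setup would also need to account for replication lag when a user reads right after writing.

```python
from typing import Dict

# Hypothetical connection strings, for illustration only.
PRIMARY = "postgres://primary.internal/app"
READ_REPLICA = "postgres://replica.internal/app"
DEDICATED_TENANTS: Dict[str, str] = {
    "tenant-big": "postgres://tenant-big.internal/app",
}


def pick_database(tenant_id: str, is_write: bool) -> str:
    """Choose which database a query should go to."""
    dedicated = DEDICATED_TENANTS.get(tenant_id)
    if dedicated is not None:
        return dedicated  # noisy tenant: isolated from everyone else
    # Everyone else: writes go to the primary, reads to the replica.
    return PRIMARY if is_write else READ_REPLICA


write_target = pick_database("tenant-small", is_write=True)
read_target = pick_database("tenant-small", is_write=False)
noisy_target = pick_database("tenant-big", is_write=False)
```

Keeping the choice in one function means the rest of the code does not change when a tenant is moved or another replica is added.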

Conclusion: scale slowly, win quickly

Start with caching and a CDN, move heavy work off the request, add a couple of replicas, and fix the obvious database slowdowns. Measure, repeat, and only add complexity when the data proves you need it. Simple steps, done in order, usually beat big rewrites.