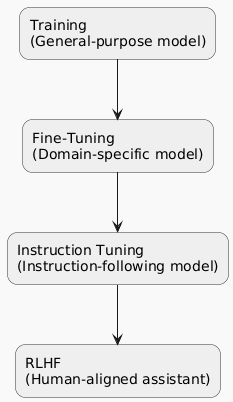

Training: The Blank-Slate Bootcamp

At the beginning, an LLM is basically a big calculator with random knobs turned every which way. Training is where those knobs get tuned. We shove the model an ocean of text (yes, scraped from the internet, so it has seen your embarrassing forum posts) and tell it: “Guess the next word.”

Do this billions of times, and voilà: it starts to understand how words and ideas connect. At this stage, the model is a generalist. It knows a bit about everything, but it’s not going to diagnose your MRI scan or draft your tax return.

Fine-Tuning: Picking a Major

Once the foundation is solid, fine-tuning is where a model gets career-ready. Instead of “all text ever,” we feed it a smaller, highly focused dataset. Want to understand medical imaging? Toss it a library of X-rays. Need it to summarize legal briefs? Hand it case law.

The idea: take the jack-of-all-trades brain and polish it into something that actually helps in the real world.

Instruction Tuning: Learning to Take Orders

Here’s where the model stops being an annoying roommate and starts acting like a decent assistant. Instruction tuning teaches it to recognize when a user says things like “Summarize this” or “Write me an email,” and to respond accordingly instead of just continuing your sentence like an improv partner who won’t stop.

Think of it as the difference between a parrot repeating back words and a helpful friend who knows when you’re asking for advice versus when you’re telling a story.

RLHF: The Politeness Coach

Finally, there’s RLHF (Reinforcement Learning with Human Feedback). This is where humans come in and rank model outputs: “This answer is helpful, this one’s weird, and this one sounds like it wants to start Skynet.” The model then learns to prefer the answers humans like best.

The payoff? The model learns not just facts, but also values—like being polite, refusing shady requests, or not giving cooking tips that double as chemistry experiments.

Why It Matters

- Training = general knowledge of language.

- Fine-tuning = specialized skills.

- Instruction tuning = responsiveness to user intent.

- RLHF = alignment with human values.

For businesses, researchers, or hospitals trying to use AI responsibly, knowing these steps is like reading the ingredients before you serve the dish. Training from scratch is insanely expensive, but the follow-up steps—fine-tuning, instruction tuning, and RLHF—make it possible to adapt these huge brains into useful, safe, and reliable tools.